Technology R&D Projects

TR&D projects guide CAI2R research on better ways to gather medical image data, process information, and inform care.

The projects focus on novel image acquisition and reconstruction methods, hardware and sensors, and biophysical modeling of tissue properties.

CAI2R is pushing the field toward an imaging paradigm in which fast, intelligent scans deliver richly informative, actionable health data. Our research is guided by four Technology R&D Projects, or TR&Ds.

Active Technology R&D Projects

The current TR&D Projects, summarized below, have laid the foundation for our Center’s new, evolved TR&Ds proposed for 2024-2029, briefly described above.

TR&D 1

Reimagining the Future of Scanning: From snapshots to streaming, from imitating the eye to emulating the brain

Principal investigator: Daniel K. Sodickson, MD, PhD

We are developing technologies that move biomedical imaging toward fast, simple, universal acquisitions that yield quantitative parameters sensitive to specific disease processes.

The present MRI paradigm can be likened to that of early photography, which required the photographer to manually determine camera settings, the subjects to remain motionless during exposure, and the exposed plate to undergo development at a later time. Similarly, today’s MRI requires technologists to manage complex controls, patients to hold still in the scanner, and the acquired data to undergo reconstruction into human-readable images. Imaging exams typically consist of start-and-stop scan protocols—like series of snapshots—with “dead times” between acquisitions. Standard reconstruction algorithms lack the intelligence to correct flaws caused by operator error or patient motion.

In this project, we investigate and develop MRI methods more analogous to contemporary digital photography and video streaming. We create intelligent algorithms to dynamically guide data acquisition and reconstruction in order to deliver better and more informative images. The flexibility of such methods also has the potential to free technologists from managing intricate scan settings and to liberate patients from the burden of having to hold still for long periods.

Our core expertise in pulse-sequence design, parallel imaging, compressed sensing, model-based image reconstruction, and machine learning allows us to question long-established assumptions about data acquisition, image formation, and even scanner design.

This project has led to substantial breakthroughs in rapid, continuous, comprehensive MRI methods, in machine learning approaches to MR data acquisition and reconstruction, and in artificial intelligence (AI) algorithms for medical image analysis.

Some of our advances are already clinically available worldwide. For example, GRASP, a technique that produces clear MR images without requiring patients to hold their breath during exams, ships on Siemens MRI scanners under the name Compressed Sensing GRASP-VIBE.

CAI2R scientists were among the first to demonstrate the use of deep learning for reconstruction of MR data, and the first to show that such reconstructions are diagnostically interchangeable with traditional ones. Our scientists, through collaborations with NYU Center for Data Science, Facebook AI Research, and other research partners, are also advancing AI approaches to analysis and interpretation of medical image data.

Explore TR&D 1 on NIH RePORTER.

Note: At the time of founding of our Center, in September 2014, this project was titled “Toward Rapid Continuous Comprehensive MR Imaging: New Methods, New Paradigms, and New Applications.” In August 2019, when the NIH renewed our NCBIB mandate, the name was updated in order to reflect new goals.

TR&D 2

Unshackling the Scanners of the Future: From rigid control to flexible sensor-rich navigation

Principal investigators: Ryan Brown, PhD; Christopher M. Collins, PhD

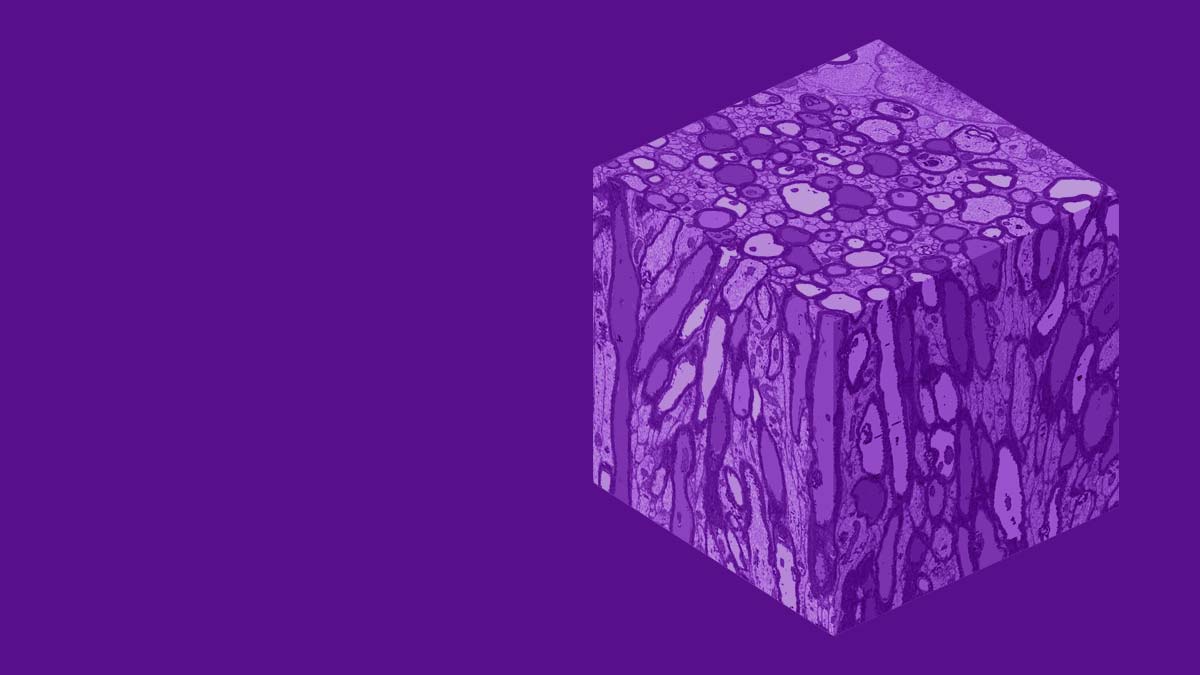

We are developing radiofrequency (RF) hardware and novel MRI methods that introduce unprecedented flexibility to imaging.

The evolution of MRI technology has tended toward progressively greater control over the electromagnetic conditions in which imaging data are acquired. In pursuit of stronger signal, higher resolution, and fewer image artifacts, the discipline has converged on rigid, complex coil arrays and painstakingly calibrated magnetic fields.

Our research has led us to envision a different philosophy—one aimed at creating flexible hardware and navigating rather than controlling inhomogeneities of the scanner environment.

CAI2R is also developing ultra-flexible RF coils based on a novel technology invented by our scientists. This new type of imaging array dissolves long-standing constraints on array design, which demanded that geometrically intricate arrangements of coil elements be meticulously optimized to cancel out couplings, and then rigidly preserved in the optimal configuration.

Taking advantage of principles derived from MR fingerprinting, CAI2R scientists have described a new method for multiparametric imaging with heterogeneous radiofrequency fields. The technique, called Plug-and-Play MR fingerprinting (PnP-MRF), departs from the convention of carefully calibrated superimpositions of RF fields from distinct transmit elements. Instead, PnP-MRF creates a diverse distribution of transmit fields in a way that excites all areas of the target field of view and maps the inhomogeneous field distributions along parameters of interest. The method is now in the translational research phase, with a work-in-progress package available on select Siemens scanners.

Beyond these technologies, we are also exploring what information can be gleaned from new types of sensors. Just as self-driving cars continuously probe their environment with LIDAR sensors, “self-driving scanners” may one day be able to navigate through substantial inhomogeneities and dynamic variations if outfitted with a sufficient number and variety of sensing mechanisms. One example is the Pilot Tone motion-detection system developed in TR&D 3. Other candidates may include ultrasound transducers and 3D cameras.

Explore TR&D 2 on NIH RePORTER.

Note: At the time of founding of our Center, in September 2014, this project was titled “Radiofrequency Field Interactions with Tissue: New Tools for RF Design, Safety, and Control.” In August 2019, when the NIH renewed our NCBIB mandate, the name was updated in order to reflect new goals.

TR&D 3

Enriching the Data Stream: MR and PET in concert

Principal investigators: Hersh Chandarana, MD, MBA; Giuseppe Carlucci, PhD (via subcontract with UCLA)

If we think of medical imaging as an extension of human sight, we may say that combining MRI with positron emission tomography (PET) is analogous to supplementing sight with other senses. Just as visual, auditory, tactile, and other sensations are simultaneously processed and interpreted by the brain into actionable information, we envision a multisensory scanner that generates complementary data streams to be interpreted with the aid of artificial intelligence (AI).

Recent advances in computing power, machine learning, and sensor miniaturization have created an opportunity to augment traditional MRI acquisitions with new, informative data. Our scientists are exploring the possibilities of outfitting scanners with non-traditional technologies to create multisensory imaging machines. In one such approach, we developed a portable stand-alone sensor to detect patient respiratory motion and include the resulting data in the MR acquisition. The device, called Pilot Tone, can be used to correct images for the effects of breathing motion and is now being tested on select Siemens systems.

CAI2R researchers are also investigating how AI may be used in joint image reconstruction of MR and PET data to leverage complementary information from each modality. We are exploring the use of machine learning algorithms to reduce partial-volume effects in PET images and to reconstruct list-mode data from low-specific-activity radiopharmaceuticals.

Our scientists are also developing radiotracers specifically intended for simultaneous PET and MRI acquisition and joint image reconstruction, and we support a number of Collaborative Projects with custom 11C and 18F radiotracers produced in our state-of-the-art radiochemistry facility.

Explore TR&D 3 on NIH RePORTER.

Note: At the time of founding of our Center, in September 2014, this project was titled “Advancing MR and PET through Synergistic Simultaneous Acquisition and Joint Reconstruction.” In August 2019, when the NIH renewed our NCBIB mandate, the name was updated in order to reflect new goals.

TR&D 4

Revealing Microstructure: Biophysical modeling and validation for discovery and clinical care

Principal investigators: Els Fieremans, PhD; Dmitry Novikov, PhD

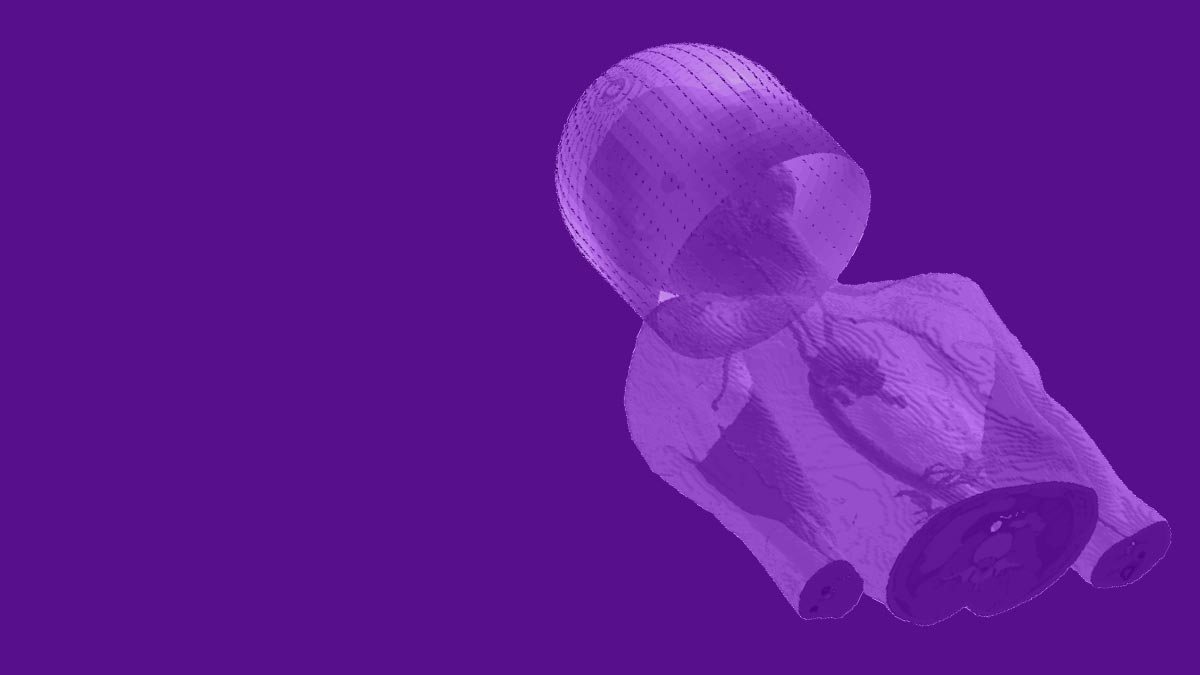

We are developing imaging techniques to uncover information about tissues at the cellular level, also called the mesoscale, where disease processes often originate but evade detection.

Although tissue microstructure lies beyond the image resolution limit of MRI, properties of the cellular environment do inform the MR signal, which originates at an even smaller scale of molecules. We develop sophisticated biophysical models, imaging methods, and validation strategies to identify signatures of specific microstructural features in the MR signal and to create clinically viable ways of mapping such properties.

Our researchers have published seminal work that elucidates the mesoscale origins of diffusion-weighted MRI data and identifies parameters within which the data carry reliable information. In deriving new functional forms that capture such information, we use methodology borrowed from fundamental physics. To validate theoretical developments, we employ leading-edge tools, including electron microscopy and computational neuroanatomy. Our scientists have also created state-of-the-art denoising methods.

In particular, we develop and validate tissue- and disease-specific models of neurodegeneration, muscular disorders, and cancer. These models are intended to provide the physical and biological groundwork for the development of imaging methods across other areas of our research. We are also exploring several test cases in which microstructure mapping may be used to inform diagnosis and therapy, such as to reduce overtreatment of prostate cancer or to improve neurosurgical planning.

Explore TR&D 4 on NIH RePORTER.

Note: This project launched in August 2019, when the NIH renewed our NCBIB mandate.
